[Hackathon] Calories Showing – App helps to check calories from food image

![[Hackathon] Calories Showing – App helps to check calories from food image](https://homepage-media.s3.ap-southeast-1.amazonaws.com/wp-content/uploads/2023/03/20143340/calories_showing_featured_image.png)

Introduction

(https://www.worldbank.org/en/news/infographic/2020/01/29/the-crippling-costs-of-obesity)

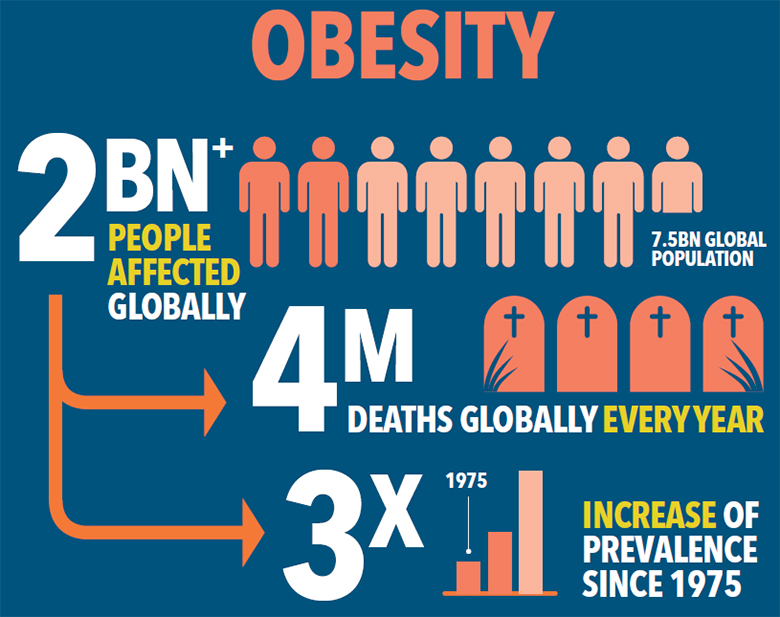

Today the world faces a double burden of malnutrition: both undernutrition and obesity. Obesity in particular is rising rapidly. Recent data show that obesity has nearly tripled since 1975 and now accounts for 4 million deaths worldwide every year. In 2016, over 2 billion adults (44 percent) were overweight or obese.

(https://www.azumio.com/blog/nutrition/healthy-eating-101-food-journaling)

Overweight people take in too many calories, while underweight people do not take in enough to sustain themselves. Nutritionists therefore recommend that each of us plan daily calorie intake and expenditure, which helps address undernutrition, prevent obesity, and maintain a healthy body.

(https://atlasbiomed.com/blog/best-food-diary-guide-2020/)

With the rapid development of technology, especially AI (Artificial Intelligence), applying it to daily life is an inevitable trend, and Fitness/Healthcare is no exception.

This is the idea behind our team's Calories Showing app. Given a photo of food taken with the phone camera, the app tells you how many calories the food contains, helping you adjust your calorie intake.

Calories Showing app

Technologies

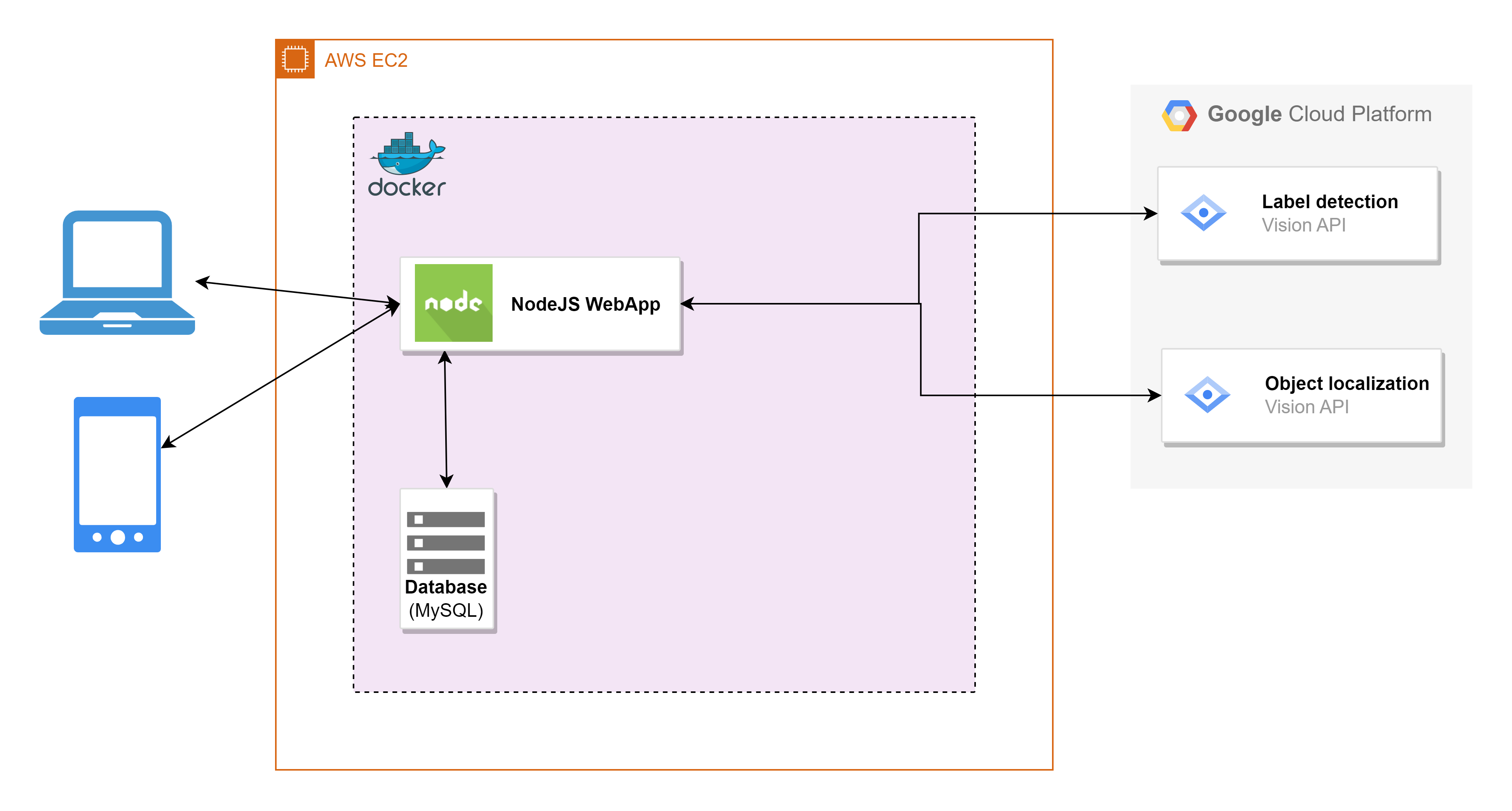

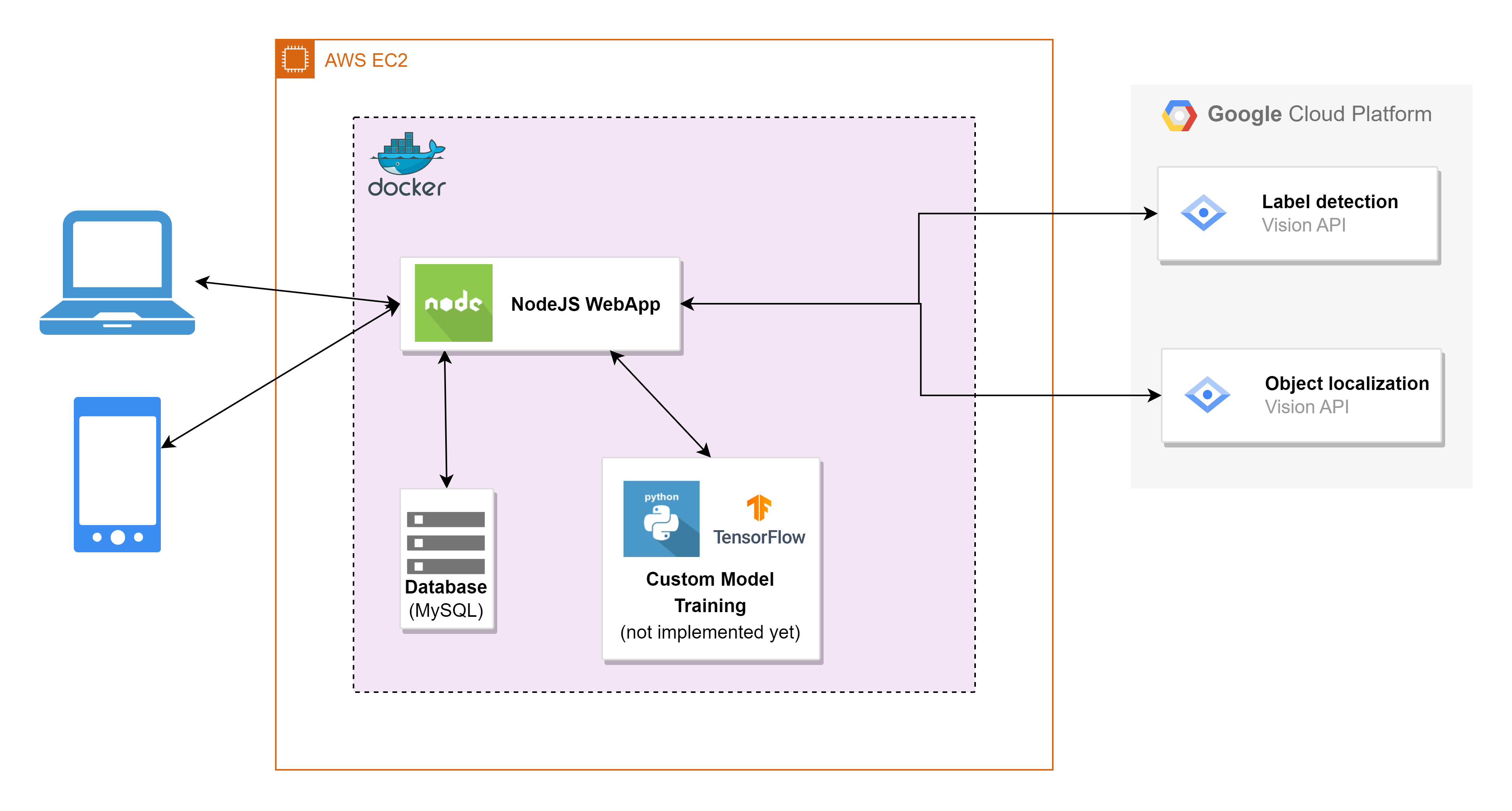

To support both computers and mobile devices, our team decided to build a web app in JavaScript (Node.js). We use MySQL to store calorie data and to make it easier to add functions later (for example, saving user information).

To make the app easier to deploy and to extend later, we run both the web app and MySQL in Docker.

Google Cloud Vision

The Google Cloud Vision API offers powerful pre-trained machine learning models through a REST API. It helps developers easily integrate features such as object detection, face detection, image classification, labeling, and extraction of printed or handwritten text into their applications.

In the project we use two features provided by the Google Cloud Vision API:

- Label Detection: detect and extract information about entities in an image, across a group of categories.

- Object Localization: detect and extract multiple objects in an image.

The system combines the results returned by Google Cloud Vision with the calorie data stored in the database and returns the calories of the food to the user.
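The matching step can be sketched as follows. This is a minimal illustration, not the app's actual code: the in-memory table stands in for the MySQL calorie table, its values are illustrative placeholders, and the names `CALORIES_PER_100G`, `GENERIC_LABELS`, `match_foods`, and the `min_score` threshold are all assumptions. The input mimics label annotations from the Vision API response, each with a `description` and a `score`.

```python
# Hypothetical calorie table (kcal per 100 g). Illustrative values only;
# in the app this data would come from the MySQL database.
CALORIES_PER_100G = {
    "banana": 89,
    "apple": 52,
    "rice": 130,
}

# Assumed list of labels that are too general to identify a food.
GENERIC_LABELS = {"food", "fruit", "dish", "ingredient"}


def match_foods(annotations, min_score=0.6):
    """Return (name, kcal_per_100g) pairs for annotations found in the table.

    `annotations` is a list of dicts with `description` and `score` keys.
    Low-confidence, generic, and duplicate labels are skipped.
    """
    matches = []
    seen = set()
    for ann in annotations:
        name = ann["description"].strip().lower()
        if ann["score"] < min_score or name in GENERIC_LABELS or name in seen:
            continue
        if name in CALORIES_PER_100G:
            matches.append((name, CALORIES_PER_100G[name]))
            seen.add(name)
    return matches
```

Labels that are confident but missing from the table simply produce no match here; in the app, that case is where manual input would take over.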

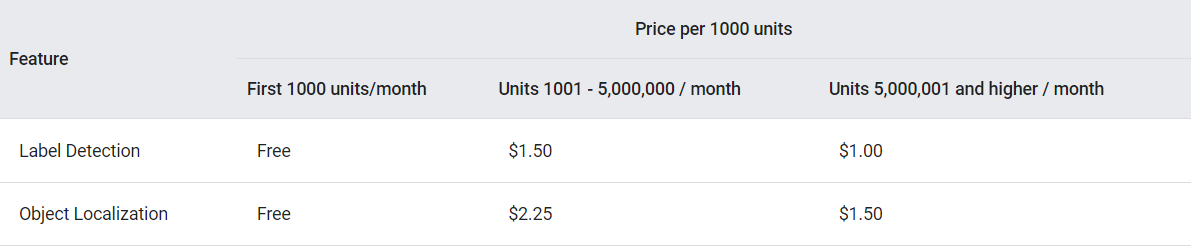

Price list of Google Cloud Vision

The pricing is suitable for small projects with few users. As the number of users grows, so does the cost.

=> Therefore, we should not depend too heavily on Google Cloud Vision and need an alternative solution (presented in the following section).

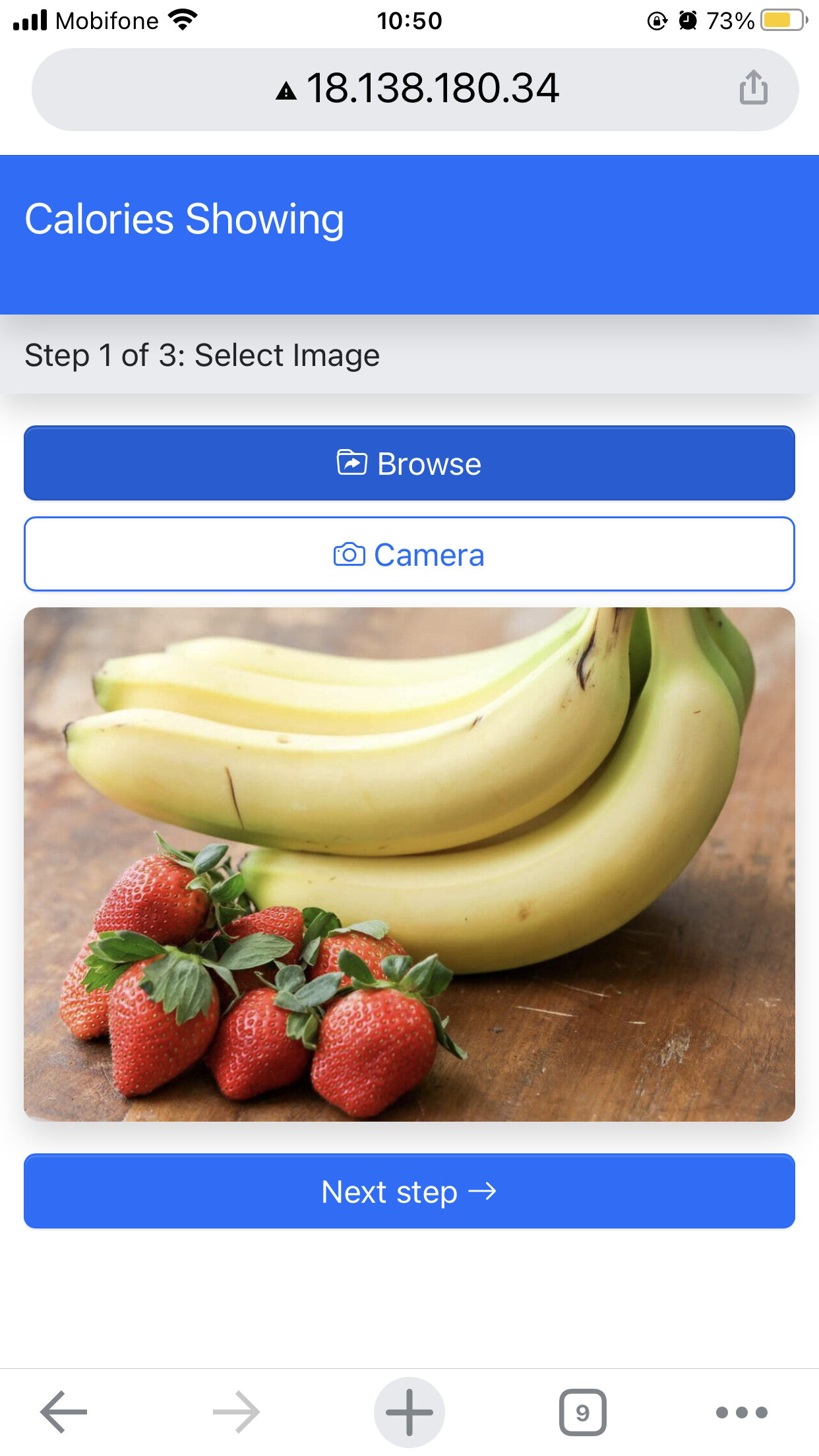

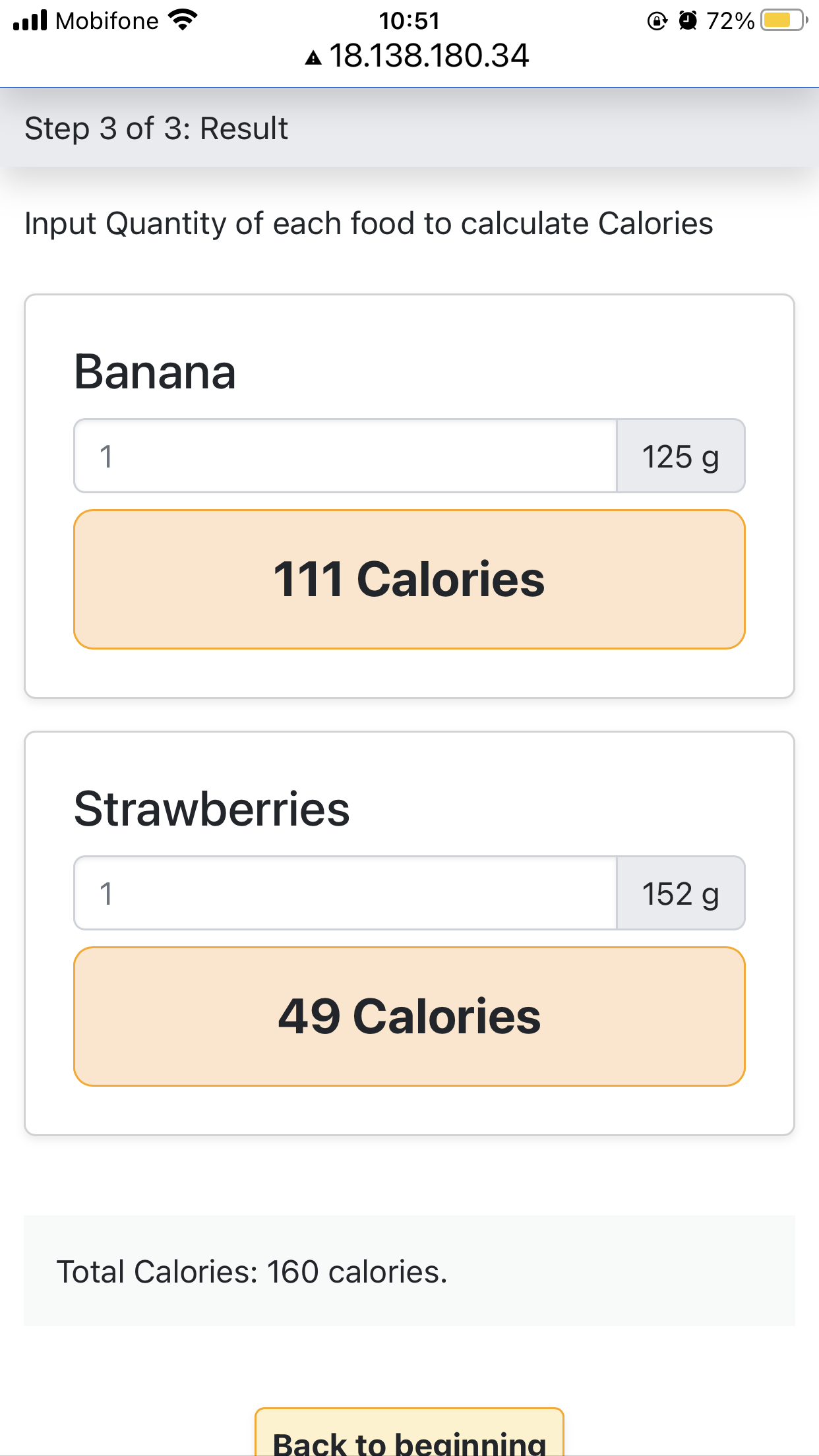

User Interface and functions of the app

Choose an image from your phone, or take one with your phone camera.

From the selected image, the AI detects the food in the picture. You can also manually enter any food it missed.

Finally, on the result screen, you can enter the amount of each food so that the system calculates the calories.
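The calculation on the result screen could look like the sketch below. It assumes that amounts are entered in grams and that the database stores kcal per 100 g; the post does not specify either, and the function name `total_calories` is hypothetical.

```python
def total_calories(items):
    """Sum the calories of a meal.

    `items` is a list of (kcal_per_100g, grams) pairs, one per food
    the user confirmed on the result screen.
    """
    return sum(kcal * grams / 100 for kcal, grams in items)
```

For example, `total_calories([(89, 120), (130, 200)])` adds 120 g of a food at 89 kcal per 100 g to 200 g of a food at 130 kcal per 100 g.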

Unresolved issues and future development directions

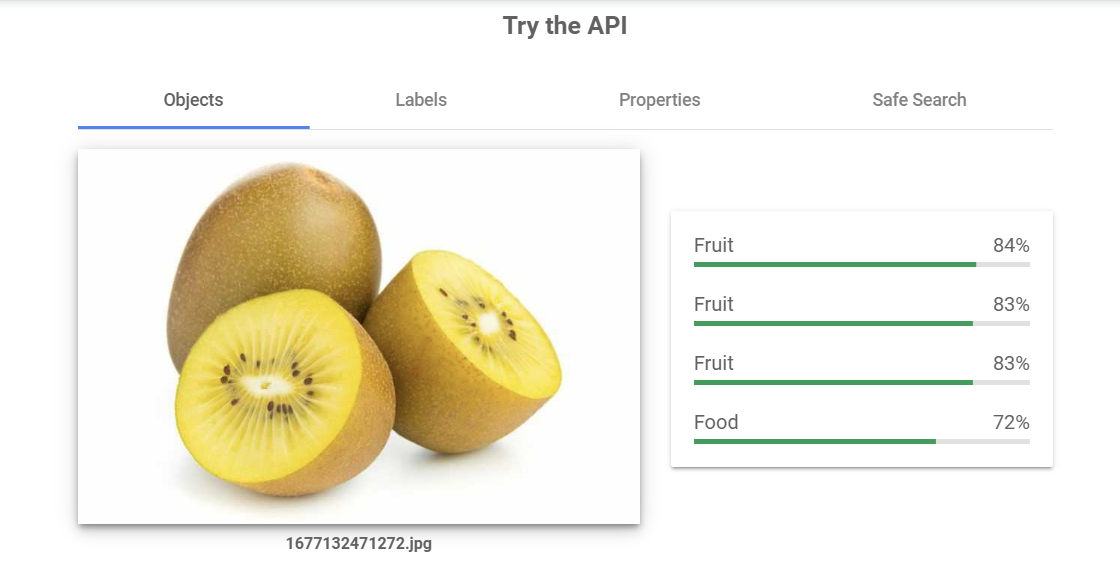

Low accuracy

Google Cloud Vision's detection dataset is broad but not deep for food, so its accuracy on food is low.

For example, for an image of a kiwi fruit, the results returned by Google Cloud Vision are very general: Fruit and Food.

To improve accuracy, the following solutions are available:

- Replace Google Cloud Vision with a food-specific AI API service such as FoodAI or LogMeal API. The advantages are higher accuracy and ease of use similar to Google Cloud Vision. The downsides are the high price and dependence on the API provider: we cannot add new training data for other foods.

- Build and train our own food-specific Deep Learning model. The advantages are high accuracy and control over the technology, so we can add new training data for foods as needed. The disadvantages are that finding a good model takes research time and that high accuracy requires large enough datasets (labeled image data).

=> After discussion, we decided to combine the solutions: build and train a food-specific Deep Learning model (Python, TensorFlow) while using Google Cloud Vision in parallel.

The combination will help:

- Partially reduce the cost of the Google Cloud Vision API.

- Overcome the initial limitation of the self-built model, whose accuracy is not yet high because the datasets are small and the optimal model has not been found.

- Separate the Deep Learning model work into its own part, so that it does not affect the building of additional app features.

- Once the self-built Deep Learning model has achieved the desired accuracy, we will remove Google Cloud Vision from the project flow.
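One possible way to combine the two detectors is sketched below. It is only an interpretation of "in parallel": the self-built model answers when it is confident, and Google Cloud Vision is called only otherwise, which is how the API cost would drop. The function name `pick_prediction`, the 0.8 threshold, and the `fetch_cloud_pred` callback are assumptions, not the team's design.

```python
def pick_prediction(own_pred, fetch_cloud_pred, threshold=0.8):
    """Choose between the self-built model and the cloud API.

    `own_pred` is a (label, confidence) pair from the self-built model.
    `fetch_cloud_pred` is a zero-argument callable that queries the cloud
    API and returns a label; it is invoked only when the self-built
    model is not confident enough, so no paid call is made otherwise.
    Returns (label, source).
    """
    label, confidence = own_pred
    if confidence >= threshold:
        return label, "own_model"
    return fetch_cloud_pred(), "cloud_vision"
```

Raising the threshold leans more on the cloud API while the self-built model is still weak; lowering it over time shifts traffic to the self-built model until the cloud call can be removed.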

Calorie lookup depends on the database

If a food is not in the database, there is no way to select it (not even through manual input).

Solution: When a food is not detected, display a dialog asking users whether they agree to let us use the uploaded image to add the food to the app's database. => If the user agrees, we save the image to storage. From that image we then collect calorie data to save to the database, and we add the image to the datasets used to train the Deep Learning model.

Future development directions

1/ Build more features to make the app complete. Planned features include registration, login, calculation of daily calorie needs, warnings for low or excess calorie intake, and weight gain/loss plans.

2/ Improve AI accuracy by building and optimizing the Deep Learning model.

Our team will therefore split into two smaller teams: one in charge of developing app features, and one building and optimizing the Deep Learning model.

References

https://www.worldbank.org/en/news/infographic/2020/01/29/the-crippling-costs-of-obesity

https://cloud.google.com/vision
